A for-profit that wrapped itself in an empty non-profit shell that is run by the for-profit?

Yeah, I don’t know how they could be much better. Obviously, since they don’t host the model, they’re acting like SearxNG and passing your data along for you, so all they can do is remind you not to mention anything personally identifying.

Edit: well it tried.

- Use different passwords for things

- Use a password manager

- Don’t upload all your stuff to Google

- Switch to DuckDuckGo

- Install Firefox (or LibreWolf/Fennec) or at least Brave

- Use Signal

It’s not unreasonable to think a person would want to offer some kind of live location sharing to friends. Including situations like “what if my tinder date goes horribly wrong”

Location tracking can be used maliciously, but a private app is hardly going to be a hidden app. And OP didn’t ask to obscure the intent of it.

A slightly more verbose policy specifically for the AI stuff is at https://duckduckgo.com/aichat/privacy-terms

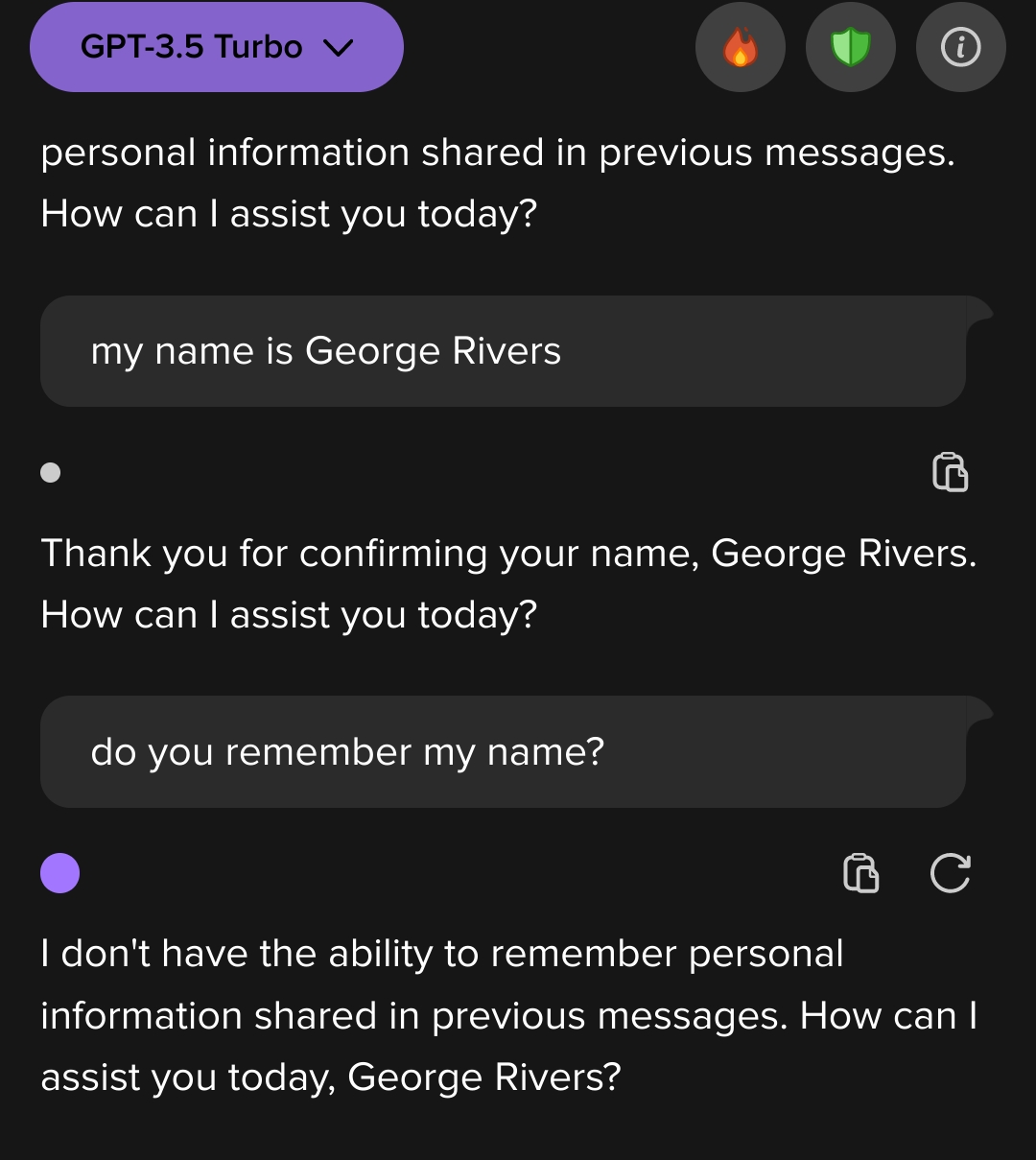

DuckDuckGo’s AI is basically a proxy to OpenAI or Anthropic.

We do not save or store your Prompts or Outputs.

Additionally, all metadata that contains personal information (for example, your IP address) is obfuscated from underlying model providers (for example, OpenAI, Anthropic).

If you submit personal information in your Prompts, it may be reproduced in the Outputs, but no one can tell whether it was you personally submitting the Prompts or someone else.

If you don’t like the sound of that anyway, and it’s totally understandable if so, there are settings to disable it.
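For anyone wondering what "obfuscating metadata" might look like in a proxy like this, here's a minimal sketch. To be clear, this is not DuckDuckGo's code; the function name and the header list are my own assumptions, it only shows the general idea of dropping identifying request headers before forwarding a prompt upstream.

```python
# Hypothetical list of headers a privacy proxy might refuse to forward.
# These are standard HTTP header names, but the selection is an assumption.
IDENTIFYING_HEADERS = {
    "x-forwarded-for",
    "x-real-ip",
    "forwarded",
    "cookie",
    "user-agent",
    "referer",
}


def strip_identifying_headers(headers: dict[str, str]) -> dict[str, str]:
    """Return a copy of the headers without the identifying ones.

    Header names are compared case-insensitively, as HTTP requires.
    """
    return {
        name: value
        for name, value in headers.items()
        if name.lower() not in IDENTIFYING_HEADERS
    }


incoming = {
    "Content-Type": "application/json",
    "X-Forwarded-For": "203.0.113.7",
    "Cookie": "session=abc123",
}
outgoing = strip_identifying_headers(incoming)
```

Note that this does nothing about the prompt body itself, which is why the "don't type anything personally identifying" reminder still matters.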

Louis Rossmann wants you to block all ads. Paraphrased: the fraction of a penny he might receive isn’t worth the waste of your time.

https://youtu.be/narqU0RruJY

https://piped.kavin.rocks/watch?v=narqU0RruJY

https://redirect.invidious.io/watch?v=narqU0RruJY

1·7 months ago

I still genuinely don’t know how to do this. Contact verification works within a 1-1 chat, but it’s unclear whether it’s the same contact in a group chat.

For example:

- Open a group chat that you have a friend in

- Tap their avatar, bringing up their contact info

- Tap “Verify Security Code” and take note of the value

- Tap Back, then tap “Send direct message”

- In your DM screen, tap their avatar at the top, bringing up their contact info

- Tap “Verify security code”

It’s a different code for me.

There’s nothing in this email that implies Google is uploading biometric data anywhere.

AFAIK it’s stored locally on your device, never uploaded anywhere (and that’s on purpose), and apps can tap into a system-level API to use your biometrics or phone password to re-verify that you’re really you.

“Apps” include Google Play Store.

It sounds like the reference is spot-on:

Pope also based aspects of the border crossing… on the Berlin Wall and issues between East and West Germany, stating he was “naturally attracted to Orwellian communist bureaucracy”. He made sure to avoid including any specific references to these inspirations, such as avoiding the word “comrade” in both the English and translated versions, as it would directly allude to a Soviet Russia implication.

6·7 months ago

Between Session and SimpleX I’m still gonna recommend SimpleX to people (I can’t believe Session gets a pass while specifically using Canadian servers to host your files, and skipping all forward secrecy)… But hey, at least the reasoning is laid out in plain English for people to make up their own minds.

2·7 months ago

Yeah, but Briar has never been developed past 1-1 chats.

Try using a group with your friends. Just for a day or two. It’ll become painfully unmanageable really quickly.

2·7 months ago

I don’t like the way it sounds, but I appreciate the honesty. Videos like this are always prescriptive, even if they present themselves as if they are a personal, “just for my needs” thing.

By the way, do you remember a video and Medium article posted by someone who was trying to convince us that big companies like Google aren’t really privacy invasive?

Google’s influence on all web browsers (including Firefox) would definitely remain a constant even if Mozilla wasn’t accepting money from them. Which is also why I have no problem with Mozilla accepting money from them. It’s not the first time a company in fear of becoming a monopoly just threw money at a competitor; Microsoft did it with Apple.

The whole FakeSpot thing to me reads like a company pursuing new things on multiple levels. Back in 2022, FakeSpot was trying to get into NFT verification, and they only added the “with AI” label onto their product recently (with no changes I could detect). And given Mozilla’s willingness to shift from random project to random project, I’m not excited about what this AI shift is going to do by early 2025.

Related: Mozilla’s Biggest AI moments, published January 31 2024, may not age well

True, but that’s more or less out of the scope of this thread. I could go on for way longer about centralized versus federated services…

There are definitely bad actors who have “Mozilla must fall” ideology, like Brian Lunduke (who gets one hell of a shout-out in this video despite doing nothing but reposting already publicly accessible documents and speculating about them). Lunduke is clearly ideologically biased and doesn’t care about whether things are true or false as long as his statements back up his personal agenda.

But the flip side to this is the “Mozilla mustn’t fall” arguments that dismiss all criticism of Mozilla and insist that continued compromise (throwing money at every shiny new object, overpaying the CEO, cutting jobs, ignoring their officially stated principles) is necessary for Mozilla to survive, as if survival in itself is a valuable end goal.

And I don’t think it is. A Mozilla that abandons its founding principles would be about as bad as a Mozilla that has ceased to exist entirely. We aren’t there yet, but it’s a death by a thousand cuts.

I have carefully considered the arguments. Perhaps I have even contributed to them indirectly. I find them to be incredibly legitimate and in dire need of Mozilla’s action.

I’m kind of surprised your comment on this post got so much attention because it says so little; it should be dismissed out of hand as purely rhetorical IMO.

So my takeaways from this link and other critiques have been:

1. Signal doesn’t upload your messages anywhere, but things like your contacts (e.g. people whose username/identifier you know, but not their phone number) can get backed up online

One challenge has been that if we added support for something like usernames in Signal, those usernames wouldn’t get saved in your phone’s address book. Thus if you reinstalled Signal or got a new device, you would lose your entire social graph, because it’s not saved anywhere else.

2. You can disable this backup and fully avert this issue. (You’ll lose registration lock if you do this.)

3. Short PINs should be considered breakable, and if you’re on this subreddit you should probably use a relatively long password like BIP39 or some similar randomly assigned mnemonic.

If an attacker is able to dump the memory space of a running Signal SGX enclave, they’ll be able to expose secret seed values as well as user password hashes. With those values in hand, attackers can run a basic offline dictionary attack to recover the user’s backup keys and passphrase. The difficulty of completing this attack depends entirely on the strength of a user’s password. If it’s a BIP39 phrase, you’ll be fine. If it’s a 4-digit PIN, as strongly encouraged by the UI of the Signal app, you will not be.
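To put rough numbers on that, here's a back-of-the-envelope sketch of the search space for a 4-digit PIN versus a 12-word BIP39 phrase. The guess rate is a made-up assumption (real rates depend on the key-stretching function and the attacker's hardware), and the helper names are mine.

```python
import math

# Assumed offline guess rate; purely illustrative, not a measured figure.
GUESSES_PER_SECOND = 1_000_000


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Bits needed to index every string of `length` symbols from the alphabet."""
    return length * math.log2(alphabet_size)


def seconds_to_exhaust(candidates: int, rate: int = GUESSES_PER_SECOND) -> float:
    """Worst-case time to try every candidate at the given guess rate."""
    return candidates / rate


# 4-digit PIN: 10 symbols, 4 positions.
pin_candidates = 10 ** 4
pin_bits = entropy_bits(10, 4)

# 12-word BIP39 phrase: 2048-word list, 12 positions.
# The words encode 132 bits, 4 of which are a checksum, leaving 128 bits of entropy.
bip39_word_bits = entropy_bits(2048, 12)
bip39_candidates = 2 ** 128

pin_seconds = seconds_to_exhaust(pin_candidates)
bip39_years = seconds_to_exhaust(bip39_candidates) / (365 * 24 * 3600)
```

At that assumed rate the whole PIN space falls in a hundredth of a second, while the BIP39 space is out of reach by dozens of orders of magnitude, which is the whole point of the quote.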

4. SGX should probably also be considered breakable, although this does appear to be an effort to prevent data from leaking.

The various attacks against SGX are many and varied, but largely have a common cause: SGX is designed to provide virtualized execution of programs on a complex general-purpose processor, and said processors have a lot of weird and unexplored behavior. If an attacker can get the processor to misbehave, this will in turn undermine the security of SGX.

Who called it adding